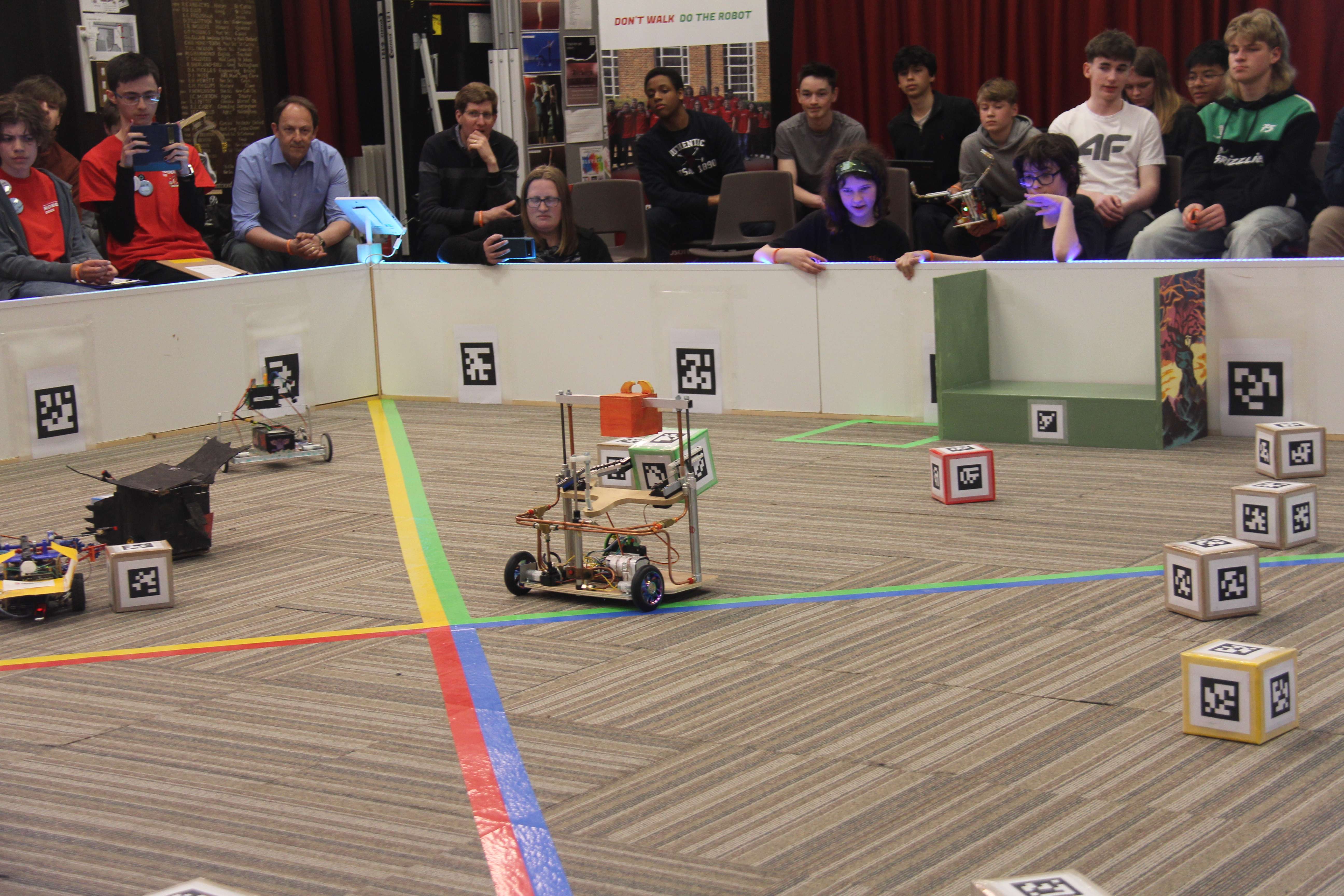

RoboCon 2025 - Dragon's Lair

Congratulations to all the teams who competed in our most recent competition, RoboCon 2025 - Dragon's Lair! Read about the competition full of sheep, gems and epic robot dragons!

Latest News

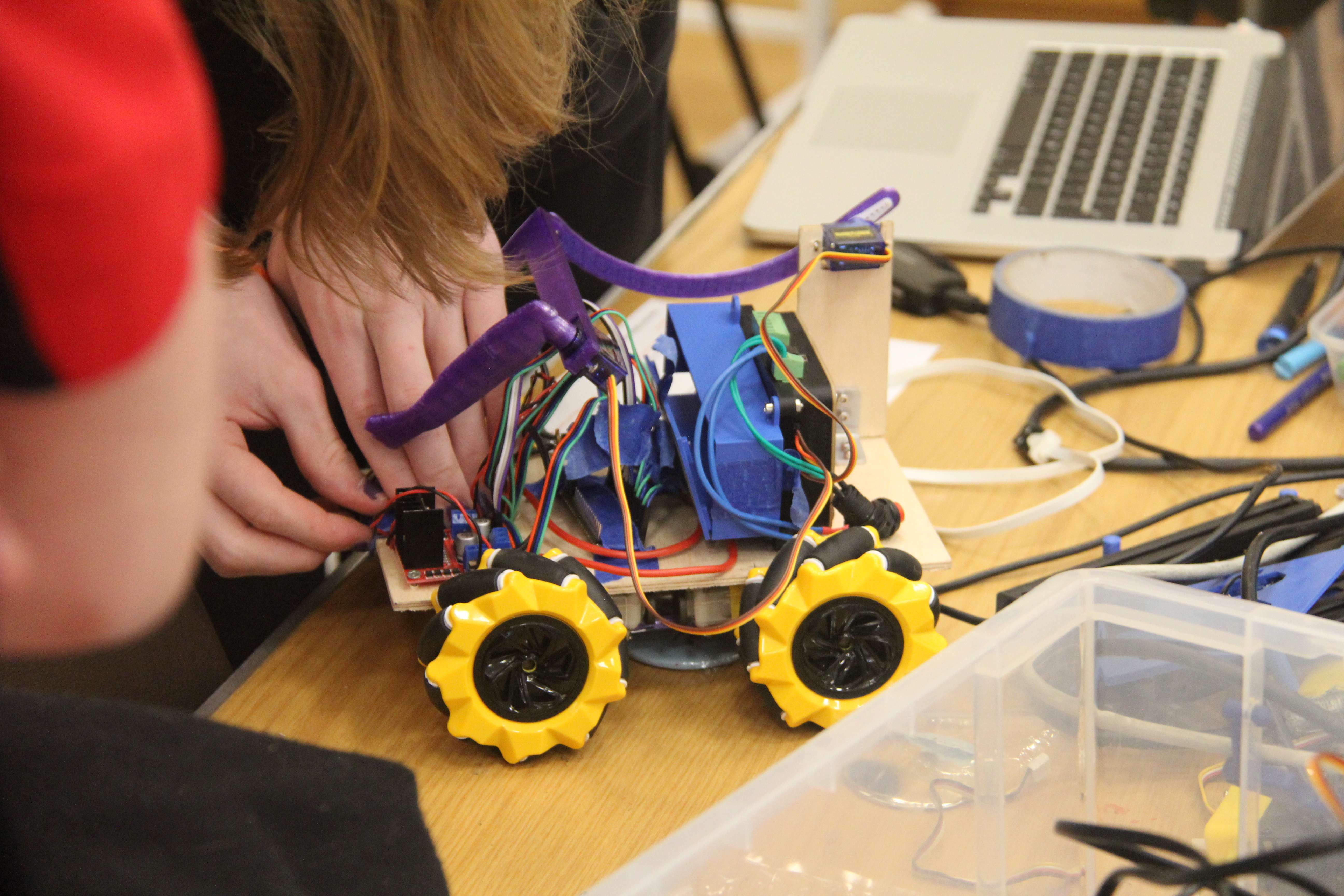

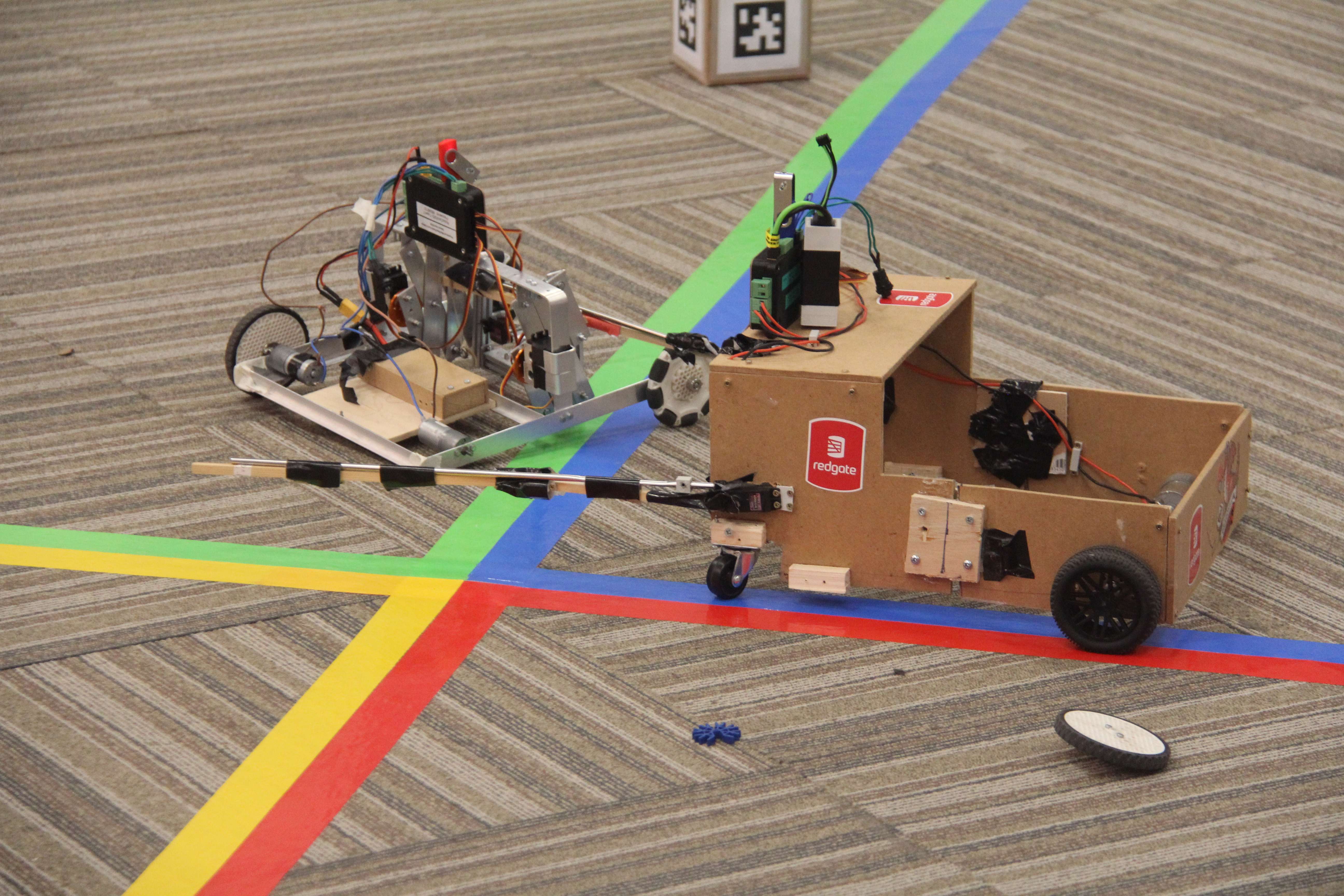

RoboCon 2025 Review

Read about this year's competition full of sheep, gems and epic robot dragons!

Last updated: 14 April 2025

A Fiery RoboCon 2025 Kick-Off

Today we launched RoboCon 2025 with our fiery new competition!

Last updated: 2 November 2024

RoboCon 2024 Review

Read all about the 2024 "Hot Potato"-themed competition, the results, and the highlights from the event!

Last updated: 11 April 2024